How to Run Ollama as a Windows Service with AlwaysUp

Automatically start Ollama server in the background whenever your computer boots.

Ensure that your LLMs are accessible 24/7 — without anyone having to log on first. Set it and forget it!

Ollama is an open-source tool that allows you to run LLMs locally on your computer.

It's a great alternative to ChatGPT, Gemini, and the other engines hosted by the large tech companies, especially for use cases prioritizing privacy and reduced cost.

To install Ollama server as a 24x7 Windows Service:

-

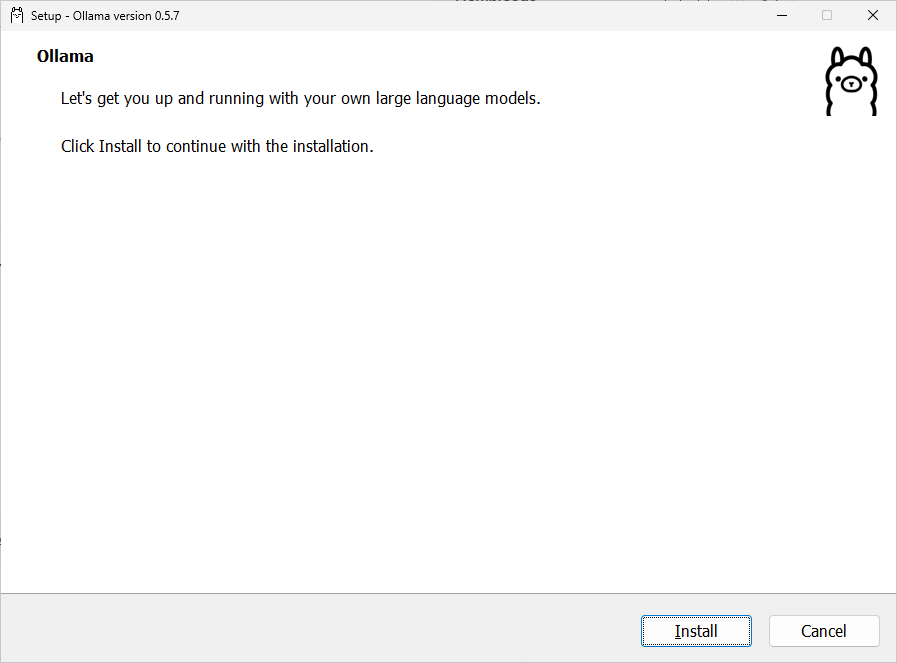

Download and install Ollama, if necessary:

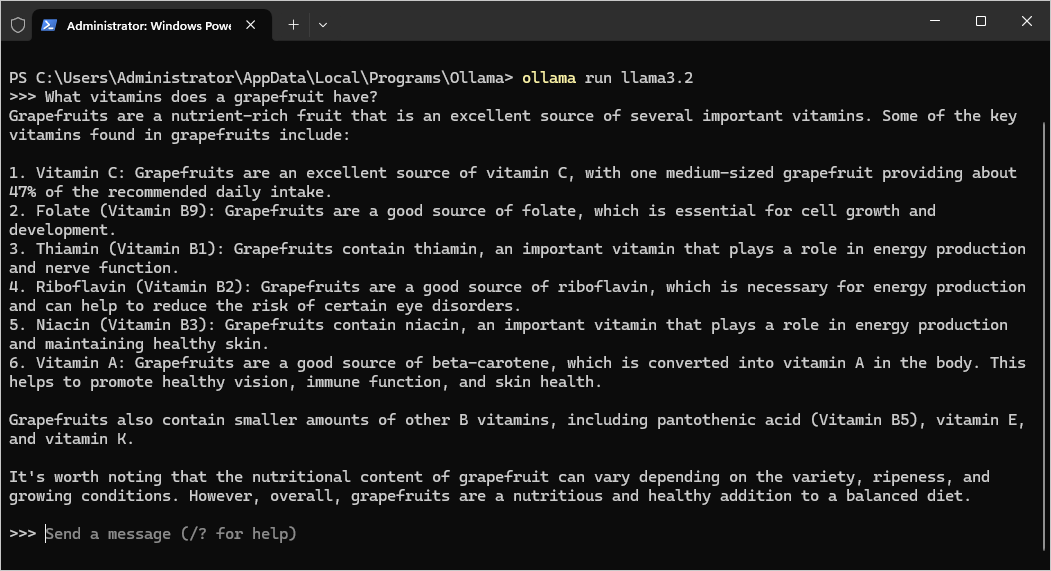

Ensure that you can interact with the models from the command line and that everything works as expected.

As you can see here, we had no trouble chatting with Meta Llama 3.2 about our favorite winter fruit on our Windows Server 2025 machine.

Note that by default, Ollama installs itself in:

C:\Users\<USER-NAME>\AppData\Local\Programs\Ollama

where <USER-NAME> is the name of your Windows account. We'll refer to that folder throughout this tutorial.
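To make the folder convention concrete, here is a minimal Python sketch that builds the default install folder and the path to ollama.exe for a given account. The helper names `default_ollama_dir` and `default_ollama_exe` are made up for illustration, and the sketch assumes Windows is installed on drive C: with user profiles under C:\Users.

```python
from pathlib import PureWindowsPath


def default_ollama_dir(user_name: str) -> PureWindowsPath:
    """Return the default per-user Ollama install folder (assumes C:\\Users profiles)."""
    return PureWindowsPath("C:/Users") / user_name / "AppData" / "Local" / "Programs" / "Ollama"


def default_ollama_exe(user_name: str) -> PureWindowsPath:
    """Return the expected full path to ollama.exe inside that folder."""
    return default_ollama_dir(user_name) / "ollama.exe"


if __name__ == "__main__":
    # "Administrator" is the account used in this tutorial; substitute your own.
    print(default_ollama_exe("Administrator"))
```

If Ollama was installed somewhere else on your machine, use that location instead of the computed one.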

-

Next, download and install AlwaysUp, if necessary.

-

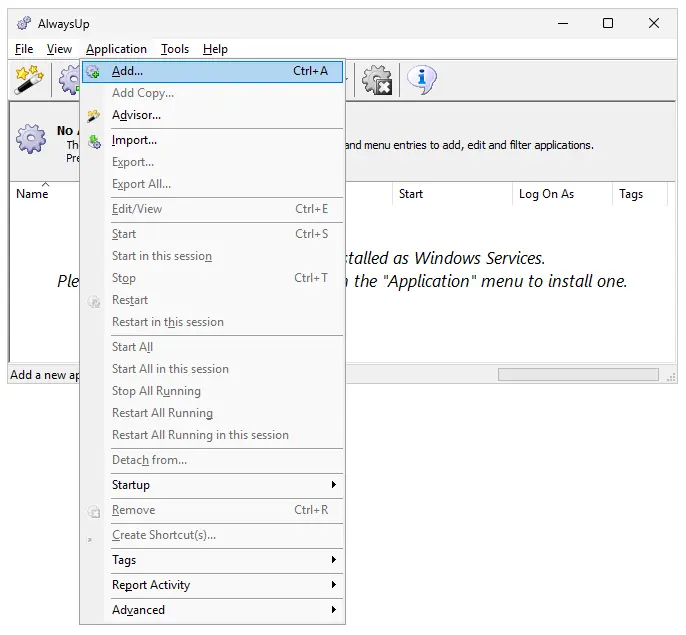

Start AlwaysUp.

-

Select Application > Add to open the Add Application window:

-

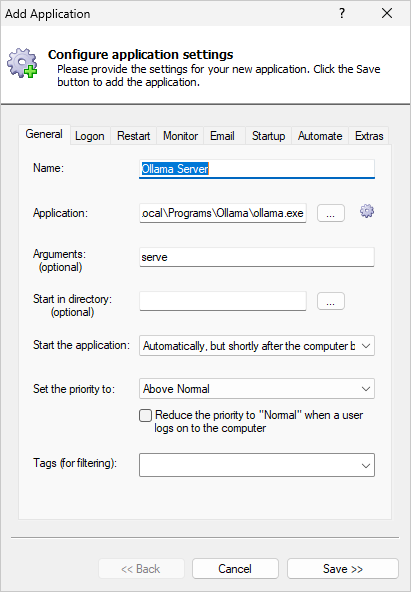

On the General tab:

-

In the Application field, enter the full path to the Ollama server executable, ollama.exe.

Since we're logged in to the Administrator account, that's "C:\Users\Administrator\AppData\Local\Programs\Ollama\ollama.exe" on our machine.

-

In the Arguments field, enter serve.

That parameter tells the Ollama executable to run in server mode, listening to requests and launching LLMs.

-

In the Start the application field, choose Automatically, but shortly after the computer boots.

With this setting, Ollama will start a couple of minutes after boot, once all the machine's critical networking services are fully initialized.

-

Next, set the priority to Above Normal.

Doing so will ensure that Ollama is given preference when Windows is doling out CPU resources to the processes running on your PC.

-

And in the Name field, enter the name that you will call the application in AlwaysUp.

We suggest Ollama Server but you can specify another name if you like.
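Taken together, the Application and Arguments fields amount to running ollama.exe with the single argument serve. The Python sketch below shows that command as an argument list and, optionally, launches it by hand so you can confirm that the values are right before saving them. The helper names `serve_command` and `launch_if_present` are hypothetical, and this is only a rough stand-in for what AlwaysUp does, since it ignores the start mode, priority and logon account.

```python
import subprocess
from pathlib import Path


def serve_command(ollama_exe: str) -> list[str]:
    """Build the command line from the Application and Arguments fields."""
    return [str(ollama_exe), "serve"]


def launch_if_present(ollama_exe: str):
    """Start `ollama.exe serve` if the executable exists; return the process or None."""
    if not Path(ollama_exe).is_file():
        return None  # Wrong path: fix the Application field before going further.
    return subprocess.Popen(serve_command(ollama_exe))


if __name__ == "__main__":
    # Path used in this tutorial; adjust it to match your own account.
    exe = r"C:\Users\Administrator\AppData\Local\Programs\Ollama\ollama.exe"
    print(serve_command(exe))
```

If you do launch a copy by hand with `launch_if_present`, stop it again before starting the service, so that two instances don't compete.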

-

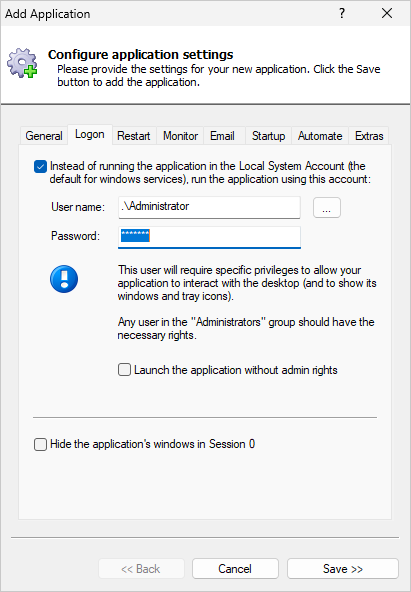

Move to the Logon tab and enter the user name and password of the account where you installed and run Ollama.

-

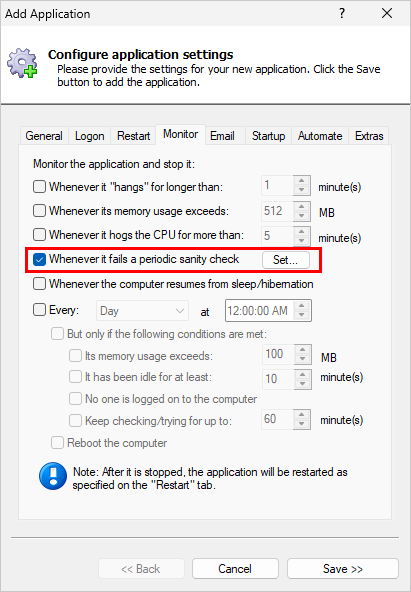

Switch to the Monitor tab. Here, we'll tell AlwaysUp to automatically restart Ollama if the server ever stops accepting incoming TCP/IP connections.

-

Check the Whenever it fails a periodic sanity check box and click the Set button on the right:

-

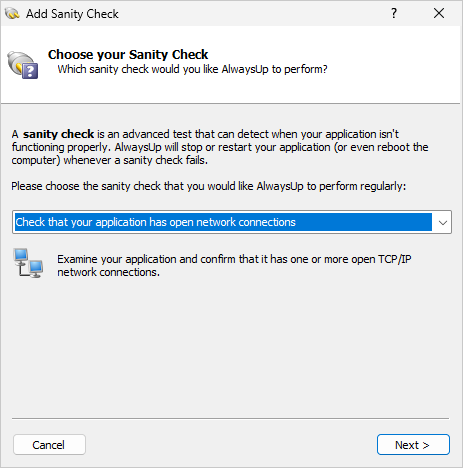

In the Add Sanity Check window, select the Check that your application has open network connections option and click Next to proceed:

-

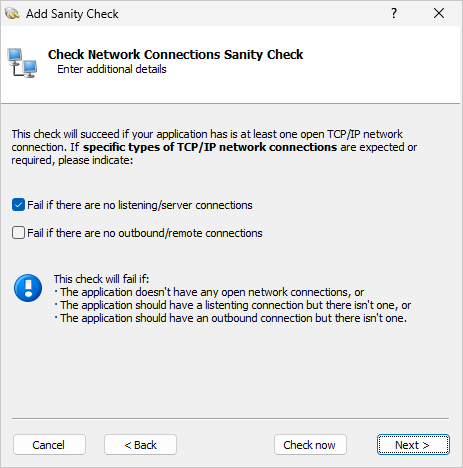

The next page allows you to specify what kind of network connections to look for.

Since Ollama is a server that must always have a listening connection, check the Fail if there are no listening/server connections box.

Click Next to continue.

-

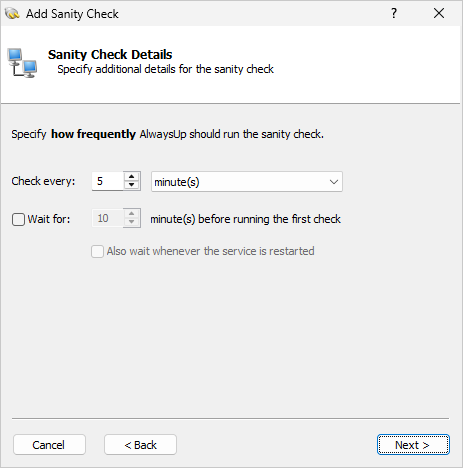

At this point, specify how often AlwaysUp should check that Ollama has a listening connection.

Every 5 minutes should be good enough, but feel free to adjust as you see fit.

After you're done, click Next to move on.

-

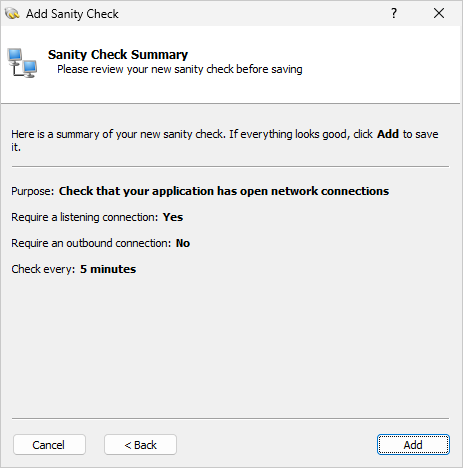

And finally, confirm your settings.

If everything looks good, click Add to record your new sanity check and return to the Monitor tab.
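For a rough idea of what "has a listening connection" means in practice, the Python sketch below probes a TCP port and reports whether anything accepts the connection. It is an approximation only: AlwaysUp inspects the connections owned by the Ollama process, whereas this hypothetical `is_listening` helper simply tries to connect from the outside. The port, 11434, is the one used for Ollama's page later in this tutorial.

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 11434, timeout: float = 3.0) -> bool:
    """Return True if something accepts a TCP connection on host:port."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    state = "listening" if is_listening() else "NOT listening"
    print(f"Port 11434 is {state}")
```

Running it by hand is a quick way to tell whether a restart triggered by the sanity check was justified.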

-

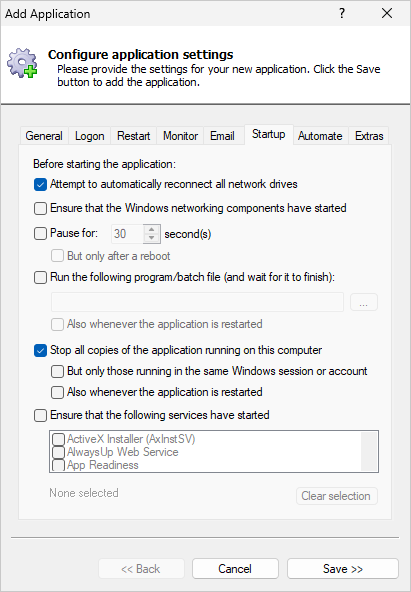

Switch to the Startup tab.

Ollama server will fail to start if another instance is already running.

To ensure that the copy launched by AlwaysUp will be viable,

AlwaysUp should first terminate any other instances of Ollama running on the machine.

Check the Stop all copies of the application running on this computer box to make that happen:

-

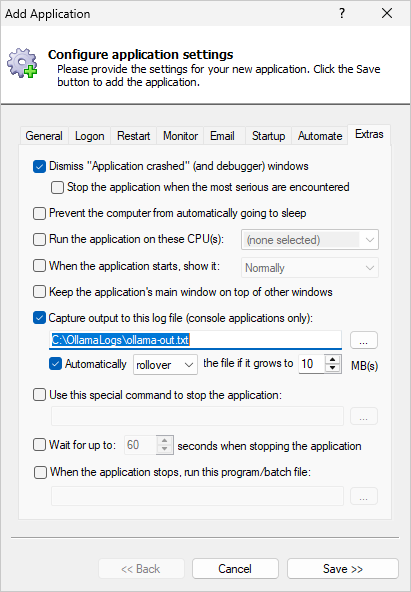

When installed as a Windows Service, Ollama will run invisibly in the background (on the isolated Session 0 desktop).

Therefore, you won't see a console window or have access to text logged to the console.

If you'd like to capture the console output to a text file:

-

Switch to the Extras tab.

-

In the Capture output to this log file field, enter the full path to the text file that should record the console output.

-

And while you're here, specify what should happen to the output file if it grows very large.
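Once output is being captured, you will probably want a quick way to look at the end of the file. The Python sketch below returns the last few lines of a log. The `tail` helper and the path C:\Logs\ollama-server.log are placeholders for illustration; use whatever path you entered on the Extras tab.

```python
from collections import deque
from pathlib import Path


def tail(log_path: str, max_lines: int = 20) -> list[str]:
    """Return the last max_lines lines of a text file without keeping the rest in memory."""
    with Path(log_path).open("r", encoding="utf-8", errors="replace") as handle:
        # A bounded deque keeps only the most recent lines as the file is read.
        return [line.rstrip("\n") for line in deque(handle, maxlen=max_lines)]


if __name__ == "__main__":
    log_file = r"C:\Logs\ollama-server.log"  # hypothetical path, adjust to yours
    if Path(log_file).is_file():
        print("\n".join(tail(log_file)))
    else:
        print(f"No log file found at {log_file}")
```

Because the file is read line by line, this stays cheap even if the log has grown large.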

-

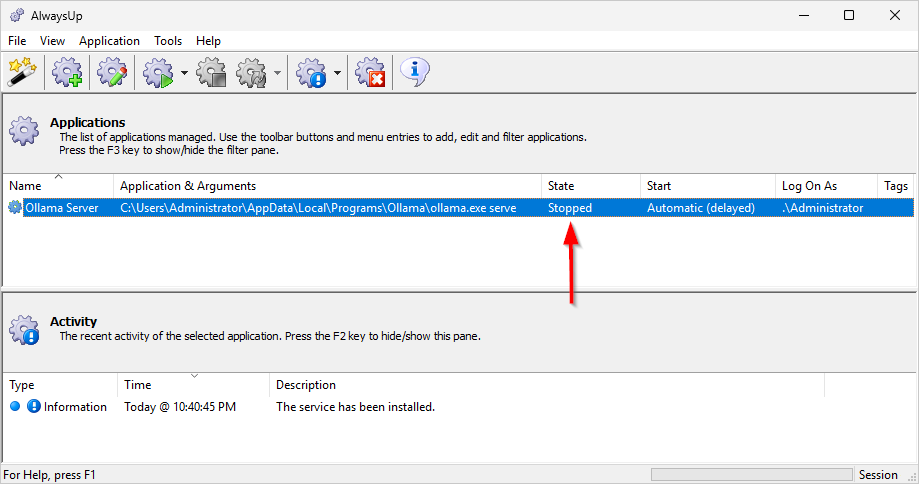

We're done configuring Ollama as a Windows Service, so click the Save button to record your settings.

In a couple of seconds, an application called Ollama Server (or whatever you called it) will show up in the AlwaysUp window.

It is not yet running, though, so the state will be "Stopped":

-

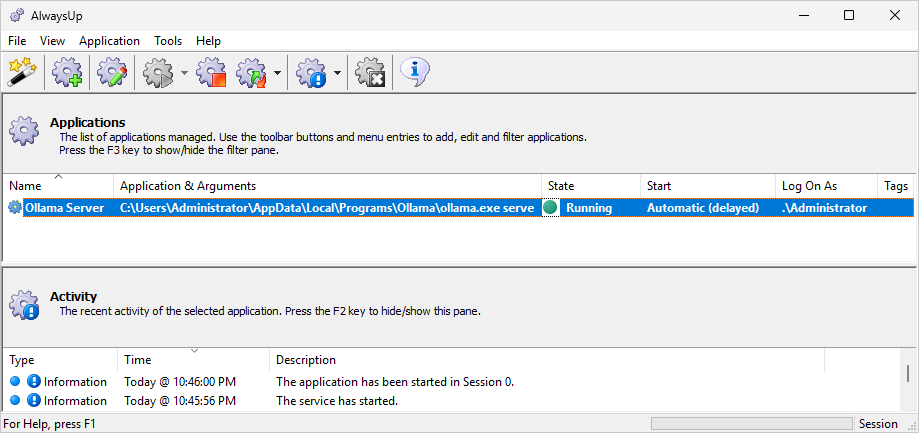

To start Ollama from AlwaysUp, choose Application > Start "Ollama Server". In a few seconds, the status will change to "Running" and Ollama server will be running in the background:

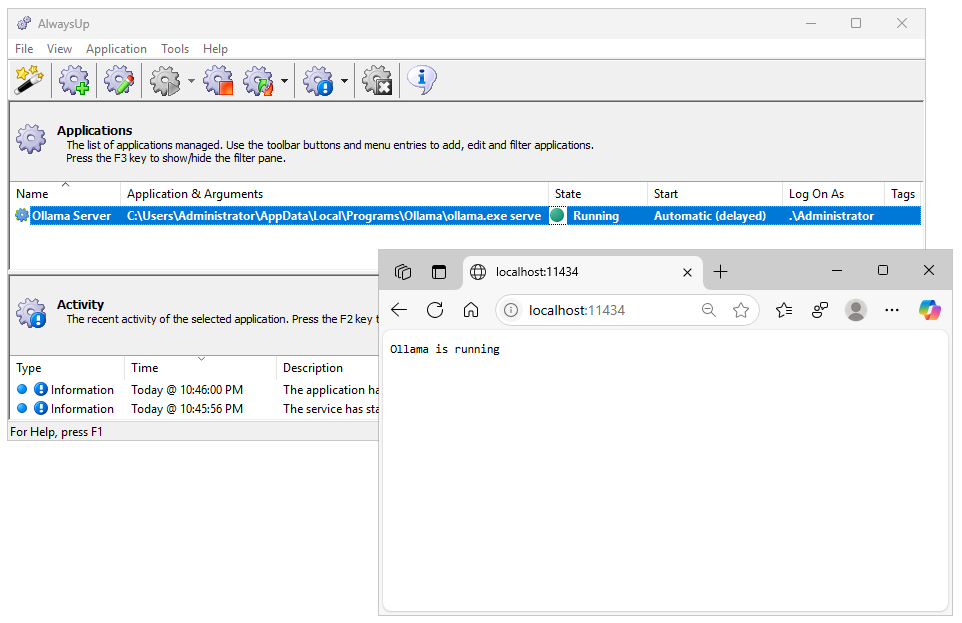

At this point, you should ensure that Ollama is working as expected. Is it responding to client requests?

Run the Ollama client from a command prompt and make sure that your LLM is launched.

Also, check that you get a positive response when you visit Ollama's page in your browser (http://localhost:11434/).
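The browser check can also be scripted, which is handy on a server where nobody is logged on. The Python sketch below requests Ollama's page and treats an HTTP 200 reply as a positive response. The `ollama_responds` helper is hypothetical, and the sketch assumes the default address shown above; it does not verify that any particular model is loaded.

```python
import http.client
import urllib.error
import urllib.request


def ollama_responds(url: str = "http://localhost:11434/", timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to the given URL comes back with status 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, HTTP error status or malformed reply.
        return False


if __name__ == "__main__":
    print("Ollama is responding" if ollama_responds() else "No response from Ollama")
```

Running the script again after the reboot test is an easy way to confirm that the service came back on its own.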

-

That's it! Next time your computer boots, Ollama server will start up automatically, in the background, without anyone needing to log on.

Please restart your PC now and test that everything works as expected after Windows comes back to life.

And please feel free to edit your Ollama service in AlwaysUp and explore the many other settings that may be appropriate for your environment.

For example, send an email if Ollama crashes or stops, set up a weekly restart to cure niggling memory leaks, and much more.

Ollama not working properly as a Windows Service?

Consult the AlwaysUp Troubleshooter, our online tool that can help you resolve the most common problems encountered when running an application as a Windows Service.

From AlwaysUp, select Application > Report Activity > Today to bring up an HTML report detailing the interaction between AlwaysUp and Ollama.

The AlwaysUp Event Log Messages page explains the messages that may appear.

Browse the AlwaysUp FAQ for answers to commonly asked questions and troubleshooting tips.

Contact us and we'll be happy to help!
